Session Chair: Luciana Nedel, Federal University of Rio Grande do Sul, Brazil

Virtual Training: Learning Transfer of Assembly Tasks Presentation of Previously Published TVCG Paper

Patrick Carlson, Alicia Peters, Stephen Gilbert, Judy M Vance and Andy Luse

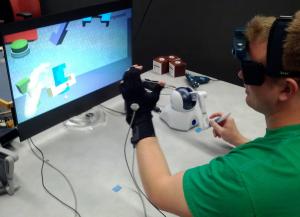

Abstract: In training assembly workers in a factory, there are often barriers such as cost and lost productivity due to shutdown. The use of virtual reality (VR) training has the potential to reduce these costs. This research compares virtual bimanual haptic training with traditional physical training in terms of effectiveness for learning transfer. In a mixed experimental design, participants were assigned to either virtual or physical training and trained by assembling a wooden burr puzzle as many times as possible during a twenty-minute period. After training, participants were tested using the physical puzzle and were retested after two weeks. All participants were trained using brightly colored puzzle pieces. To examine the effect of color, testing involved the assembly of colored physical parts and natural wood colored physical pieces. Spatial ability, as measured using a mental rotation test, was shown to correlate with the number of assemblies participants were able to complete during training. While physical training outperformed virtual training, after two weeks the virtually trained participants actually improved their test assembly times. The results suggest that the color of the puzzle pieces helped the virtually trained participants in remembering the assembly process.

Effects of Field of View and Visual Complexity on Virtual Reality Training Effectiveness for a Visual Scanning Task Presentation of Previously Published TVCG Paper

Eric Ragan, Doug Bowman, Regis Kopper, Cheryl Stinson, Siroberto Scerbo and Ryan McMahan

Abstract: Virtual reality training systems are commonly used in a variety of domains, and it is important to understand how the realism of a training simulation influences training effectiveness. We conducted a controlled experiment to test the effects of display and scenario properties on training effectiveness for a visual scanning task in a simulated urban environment. The experiment varied the levels of field of view and visual complexity during a training phase and then evaluated scanning performance with the simulator’s highest levels of fidelity and scene complexity. To assess scanning performance, we measured target detection and adherence to a prescribed strategy. The results show that both field of view and visual complexity significantly affected target detection during training; higher field of view led to better performance and higher visual complexity worsened performance. Additionally, adherence to the prescribed visual scanning strategy during assessment was best when the level of visual complexity during training matched that of the assessment conditions, providing evidence that similar visual complexity was important for learning the technique. The results also demonstrate that task performance during training was not always a sufficient measure of mastery of an instructed technique. That is, if learning a prescribed strategy or skill is the goal of a training exercise, performance in a simulation may not be an appropriate indicator of effectiveness outside of training; evaluation in a more realistic setting may be necessary.

Teaming Up With Virtual Humans: How Other People Change Our Perceptions of and Behavior with Virtual Teammates Long Paper

Andrew Robb, Andrew Cordar, Samsun Lampotang, Casey White, Adam Wendling, Benjamin Lok

Abstract: In this paper we present a study exploring whether the physical presence of another human changes how people perceive and behave with virtual teammates. We conducted a study (n = 69) in which nurses worked with a simulated health care team to prepare a patient for surgery. The agency of participants' teammates was varied between conditions; participants either worked with a virtual surgeon and a virtual anesthesiologist, a human confederate playing a surgeon and a virtual anesthesiologist, or a virtual surgeon and a human confederate playing an anesthesiologist. While participants perceived the human confederates to have more social presence (p < 0.01), participants did not preferentially agree with their human team members. We also observed an interaction effect between agency and behavioral realism. Participants experienced less social presence from the virtual anesthesiologist, whose behavior was less in line with participants' expectations, when a human surgeon was present.

Touch Sensing on Non-Parametric Rear-Projection Surfaces: A Physical-Virtual Head for Hands-On Healthcare Training Short Paper

Jason Hochreiter, Salam Daher, Arjun Nagendran, Laura Gonzalez, Greg Welch

Abstract: We demonstrate a generalizable method for unified multitouch detection and response on a human head-shaped surface with a rear-projection animated 3D face. The method helps achieve hands-on touch-sensitive training with dynamic physical-virtual patient behavior. The method, which is generalizable to other non-parametric rear-projection surfaces, requires one or more infrared (IR) cameras, one or more projectors, IR light sources, and a rear-projection surface. IR light reflected off of human fingers is captured by cameras with matched IR pass filters, allowing for the localization of multiple finger touch events. These events are tightly coupled with the rendering system to produce auditory and visual responses on the animated face displayed using the projector(s), resulting in a responsive, interactive experience. We illustrate the applicability of our physical prototype in a medical training scenario.

10:30 - 12:05 | Scouting Virtual Worlds

Session Chair: Eric Hodgson, Miami University, United States

Going through, going around: a study on individual avoidance of groups Long Paper

Julien Bruneau, Anne-Hélène Olivier, Julien Pettré

Abstract: When avoiding a group, a walker has two possibilities: either he goes through it or around it. Going through very dense groups or around huge ones would not seem natural and could break any sense of presence in a virtual environment. This paper aims to enable crowd simulators to handle such situations correctly. To this end, we need to understand how real humans decide to go through or around groups. As a first hypothesis, we apply the Principle of Minimum Energy (PME) to different group sizes and densities. According to this principle, a walker should go around small and dense groups whereas he should go through large and sparse groups. Such a principle has already been used for crowd simulation; the novelty here is to apply it to decide on a global avoidance strategy instead of local adaptations only. Our study quantifies decision thresholds. However, PME leaves some inconclusive situations for which the two solution paths have similar energetic costs. In a second part, we propose an experiment to corroborate PME decision thresholds with real observations. As controlling the factors of an experiment with many people is extremely hard, we propose to use Virtual Reality as a new method to observe human behavior. This work represents the first crowd simulation algorithm component directly designed from a VR-based study. We also consider the role of secondary factors in inconclusive situations. We show the influence of the group appearance and direction of relative motion in the decision process. Finally, we draw some guidelines to integrate our conclusions into existing crowd simulators and show an example of such integration. We evaluate the achieved improvements.

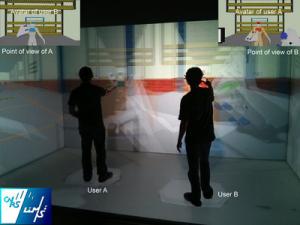

Virtual Proxemics: Locomotion in the Presence of Obstacles in Large Immersive Projection Environments Short Paper

Fernando Argelaguet Sanz, Anne-Hélène Olivier, Gerd Bruder, Julien Pettré, Anatole Lécuyer

Abstract: In this paper, we investigate obstacle avoidance behavior during real walking in a large immersive projection setup. We analyze the walking behavior of users when avoiding real and virtual static obstacles. In order to generalize our study, we consider both anthropomorphic and inanimate objects, each having its virtual and real counterpart. The results showed that users exhibit different locomotion behaviors in the presence of real and virtual obstacles, and in the presence of anthropomorphic and inanimate objects. Specifically, the results showed a decrease of walking speed as well as an increase of the clearance distance (i.e., the minimal distance between the walker and the obstacle) when facing virtual obstacles compared to real ones. Moreover, our results suggest that users act differently due to their perception of the obstacle: users keep more distance when the obstacle is anthropomorphic compared to an inanimate object, and when the anthropomorphic obstacle is seen in profile rather than from the front. We discuss implications for future large shared immersive projection spaces.
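The clearance distance defined in the abstract above (the minimal distance between the walker and the obstacle) can be illustrated with a minimal sketch. This is not the authors' analysis code: the function name, the sampled trajectory, and the circular obstacle footprint are all assumptions made for illustration.

```python
import math


def clearance_distance(trajectory, obstacle_xy, obstacle_radius=0.0):
    """Return the minimal distance between sampled walker positions and an obstacle.

    trajectory: list of (x, y) positions in meters (hypothetical tracking samples).
    obstacle_xy: (x, y) center of the obstacle in meters.
    obstacle_radius: assumed circular footprint; 0.0 treats the obstacle as a point.
    """
    if not trajectory:
        raise ValueError("trajectory must contain at least one sample")
    ox, oy = obstacle_xy
    return min(math.hypot(x - ox, y - oy) - obstacle_radius for x, y in trajectory)


# Hypothetical path that swerves around an obstacle placed at the origin.
path = [(-2.0, 0.0), (-1.0, 0.3), (0.0, 0.8), (1.0, 0.3), (2.0, 0.0)]
clearance = clearance_distance(path, (0.0, 0.0), obstacle_radius=0.25)
```

Because the minimum is taken over discrete samples, the result depends on the tracking rate; a densely sampled trajectory gives a closer approximation of the true clearance.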

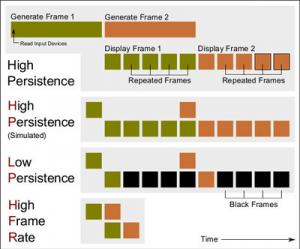

The Effect of Visual Display Properties and Gain Presentation Mode on the Perceived Naturalness of Virtual Walking Speeds Short Paper

Niels Christian Nilsson, Stefania Serafin, Rolf Nordahl

Abstract: Individuals tend to find realistic walking speeds too slow when relying on treadmill walking or Walking-In-Place (WIP) techniques for virtual travel. This paper details three studies investigating the effects of visual display properties and gain presentation mode on the perceived naturalness of virtual walking speeds: The first study compared three different degrees of peripheral occlusion; the second study compared three different degrees of perceptual distortion produced by varying the geometric field of view (GFOV); and the third study compared three different ways of presenting visual gains. All three studies compared treadmill walking and WIP locomotion. The first study revealed no significant main effects of peripheral occlusion. The second study revealed a significant main effect of GFOV, suggesting that the GFOV size may be inversely proportional to the degree of underestimation of the visual speed. The third study found a significant main effect of gain presentation mode. Allowing participants to interactively adjust the gain led to a smaller range of perceptually natural gains, and this approach was significantly faster. However, the efficiency may come at the expense of confidence. Generally, the lower and upper bounds of the perceptually natural speeds were higher for treadmill walking than for WIP. However, not all differences were statistically significant.

Applying Latency to Half of a Self-Avatar's Body to Change Real Walking Patterns Short Paper

Gayani Samaraweera, Alex Perdomo, John Quarles

Abstract: Latency (i.e., time delay) in a Virtual Environment is known to disrupt user performance and presence and to induce simulator sickness. However, can we utilize the effects caused by experiencing latency to benefit virtual rehabilitation technologies? We investigate this question by conducting an experiment that is aimed at altering gait by introducing latency applied to one side of a self-avatar with a front-facing mirror. This work was motivated by previous findings where participants altered their gait with increasing latency, even when participants failed to notice latencies as high as 150 ms or 225 ms. In this paper, we present the results of a study that applies this novel technique to average healthy persons (i.e., to demonstrate the feasibility of the approach before applying it to persons with disabilities). The results indicate a tendency to create asymmetric gait in persons with symmetric gait when latency is applied to one side of their self-avatar. Thus, the study shows the potential of applying one-sided latency in a self-avatar, which could be used to develop asymmetric gait rehabilitation techniques.
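One simple way to picture one-sided latency is a per-frame delay line applied to only one half of the tracked body. The sketch below is an assumption-laden illustration rather than the authors' system: the class name, the choice of delaying the right side, and the 60 Hz frame rate are hypothetical.

```python
from collections import deque


class OneSidedLatency:
    """Delay the poses of one body side by a fixed number of frames."""

    def __init__(self, delay_frames):
        if delay_frames < 0:
            raise ValueError("delay_frames must be non-negative")
        # The oldest entry in a buffer of length delay_frames + 1 is the pose
        # from delay_frames frames ago once the buffer has filled.
        self._buffer = deque(maxlen=delay_frames + 1)

    def update(self, left_pose, right_pose):
        """Return (left_pose, delayed_right_pose) for the current frame."""
        self._buffer.append(right_pose)
        # Until the buffer fills, the earliest recorded pose is shown instead.
        return left_pose, self._buffer[0]


def frames_for_latency(latency_ms, fps):
    """Convert a latency in milliseconds to a whole number of frames."""
    return round(latency_ms * fps / 1000)


# Hypothetical setup: 150 ms of latency at an assumed 60 Hz update rate.
delay = frames_for_latency(150, 60)
avatar = OneSidedLatency(delay_frames=2)
shown = [avatar.update(frame, frame) for frame in range(5)]
```

In the usage lines, integer frame indices stand in for poses so that the delay is easy to read off: the left side is always current while the right side trails by two frames once the buffer has filled.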

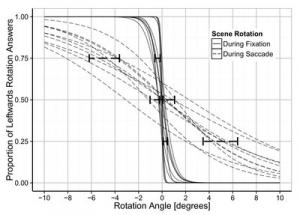

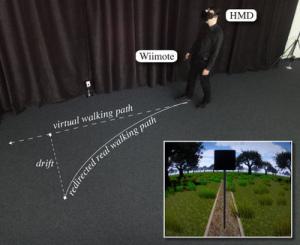

Cognitive Resource Demands of Redirected Walking Long Paper

Gerd Bruder, Paul Lubos, Frank Steinicke

Abstract: Redirected walking allows users to walk through a large-scale immersive virtual environment (IVE) while physically remaining in a reasonably small workspace. To this end, manipulations are applied to virtual camera motions so that the user's self-motion in the virtual world differs from movements in the real world. Previous work found that the human perceptual system tolerates a certain amount of inconsistency between proprioceptive, vestibular and visual sensation in IVEs, and even compensates for slight discrepancies with recalibrated motor commands. Experiments showed that users are not able to detect an inconsistency if their physical path is bent with a radius of at least 22 meters during virtual straightforward movements. If redirected walking is applied in a smaller workspace, manipulations become noticeable, but users are still able to move through a potentially infinitely large virtual world by walking. For this semi-natural form of locomotion, the question arises whether such manipulations impose cognitive demands on the user, which may compete with other tasks in IVEs for finite cognitive resources. In this article we present an experiment in which we analyze the mutual influence between redirected walking and verbal as well as spatial working memory tasks using a dual-tasking method. The results show an influence of redirected walking on verbal as well as spatial working memory tasks, and we also found an effect of cognitive tasks on walking behavior. We discuss the implications and provide guidelines for using redirected walking in virtual reality laboratories.
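To give a sense of scale for the 22-meter radius mentioned above, the following sketch works out the geometry of walking along a circular arc: the heading change accumulated per distance walked and the sideways offset from the intended straight line. Only the 22-meter figure comes from the abstract; the function names are hypothetical and the code is plain circle geometry, not the authors' redirection algorithm.

```python
import math


def curvature_rotation_deg(distance_walked_m, radius_m=22.0):
    """Heading change in degrees after walking an arc of the given radius."""
    if radius_m <= 0:
        raise ValueError("radius_m must be positive")
    # Arc length s on a circle of radius r subtends an angle of s / r radians.
    return math.degrees(distance_walked_m / radius_m)


def lateral_drift_m(distance_walked_m, radius_m=22.0):
    """Sideways offset from the initial straight-line heading after the arc."""
    if radius_m <= 0:
        raise ValueError("radius_m must be positive")
    theta = distance_walked_m / radius_m
    return radius_m * (1.0 - math.cos(theta))


per_meter = curvature_rotation_deg(1.0)                  # about 2.6 degrees per meter
drift_5m = lateral_drift_m(5.0)                          # about 0.57 m after 5 m
full_circle = curvature_rotation_deg(2 * math.pi * 22.0)
```

A full circle of this radius has a diameter of 44 meters, which is why smaller workspaces require stronger, and therefore more noticeable, manipulations.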

13:45 - 15:00 | HMD Calibration

Session Chair: Holger Regenbrecht, University of Otago, New Zealand

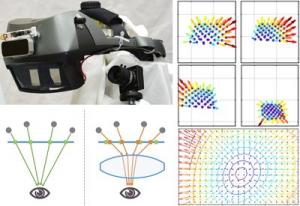

Light-Field Correction for Spatial Calibration of Optical See-Through Head-Mounted Displays Long Paper

Yuta Itoh, Gudrun Klinker

Abstract: A critical requirement for AR applications with Optical See-Through Head-Mounted Displays (OST-HMD) is to project 3D information correctly into the current viewpoint of the user -- more particularly, according to the user's eye position. Recently proposed interaction-free calibration methods automatically estimate this projection by tracking the user's eye position, thereby freeing users from tedious manual calibrations. However, these methods are still prone to systematic calibration errors. Such errors stem from eye-/HMD-related factors and are not represented in the conventional eye-HMD model used for HMD calibration. This paper investigates one of these factors -- the fact that optical elements of OST-HMDs distort incoming world-light rays before they reach the eye, just as corrective glasses do. Any OST-HMD requires an optical element to display a virtual screen. Each such optical element has different distortions. Since users see a distorted world through the element, ignoring this distortion degrades the projection quality. We propose a light-field correction method, based on a machine learning technique, which compensates for the world-scene distortion caused by OST-HMD optics. We demonstrate that our method reduces the systematic error and significantly increases the calibration accuracy of the interaction-free calibration.

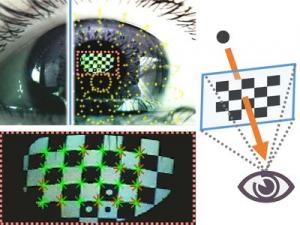

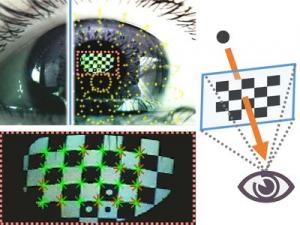

Corneal-Imaging Calibration for Optical See-Through Head-Mounted Displays Long Paper

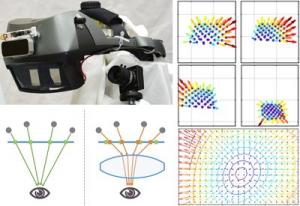

Alexander Plopski, Yuta Itoh, Christian Nitschke, Kiyoshi Kiyokawa, Gudrun Klinker, Haruo Takemura

Abstract: In recent years optical see-through head-mounted displays (OST-HMDs) have moved from conceptual research to a market of mass-produced devices with new models and applications being released continuously. It remains challenging to deploy augmented reality (AR) applications that require consistent spatial visualization, such as maintenance, training and medical tasks, because the view of the attached scene camera is shifted from the user's view. A calibration step can compute the relationship between the HMD-screen and the user's eye to align the digital content. However, this alignment is only viable as long as the display does not move, an assumption that rarely holds for an extended period of time. As a consequence, continuous recalibration is necessary. Manual calibration methods are tedious and rarely support practical applications. Existing automated methods do not account for user-specific parameters and are error-prone. We propose the combination of a pre-calibrated display with a per-frame estimation of the user's cornea position to estimate the individual eye center and continuously recalibrate the system. With this, we also obtain the gaze direction, which allows for instantaneous uncalibrated eye gaze tracking, without the need for additional hardware and complex illumination. Contrary to existing methods, we use simple image processing and do not rely on iris tracking, which is typically noisy and can be ambiguous. Evaluation with simulated and real data shows that our approach achieves a more accurate and stable eye pose estimation, which results in an improved and practical calibration with a largely improved distribution of projection error.

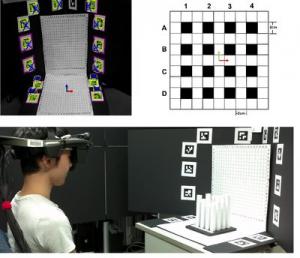

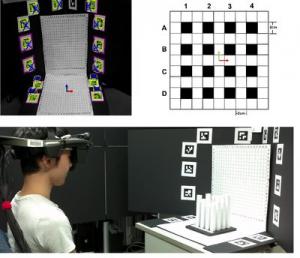

Subjective Evaluation of a Semi-Automatic Optical See-Through Head-Mounted Display Calibration Technique Long Paper

Kenneth Moser, Yuta Itoh, Kohei Oshima, Edward Swan, Gudrun Klinker, Christian Sandor

Abstract: With the growing availability of optical see-through (OST) head-mounted displays (HMDs), there is a present need for robust, uncomplicated, and automatic calibration methods suited for non-expert users. This work presents the results of a user study which both objectively and subjectively examines registration accuracy produced by three OST HMD calibration methods: (1) SPAAM, (2) Degraded SPAAM, and (3) Recycled INDICA, a recently developed semi-automatic calibration method. Accuracy metrics used for evaluation include subject-provided quality values and error between perceived and absolute registration coordinates. Our results show that all three calibration methods produce very accurate registration in the horizontal direction but cause subjects to perceive the distance of virtual objects to be closer than intended. Surprisingly, the semi-automatic calibration method produced more accurate registration vertically and in perceived object distance overall. User-assessed quality values were also the highest for Recycled INDICA, particularly when objects were shown at distance. The results of this study confirm that Recycled INDICA is capable of producing equal or superior on-screen registration compared to common OST HMD calibration methods. We also identify a potential hazard in using reprojection error as a quantitative analysis technique to predict registration accuracy. We conclude by discussing the further need for examining INDICA calibration in binocular HMD systems, and the present possibility for creation of a closed-loop continuous calibration method for OST Augmented Reality.

15:30 - 16:30 | Displays

Session Chair: Hiroyuki Kajimoto, The University of Electro-Communications, Japan

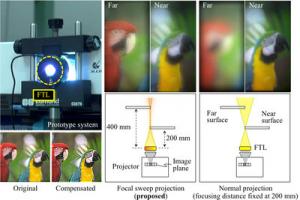

Extended Depth-of-Field Projector by Fast Focal Sweep Projection Long Paper

Daisuke Iwai, Shoichiro Mihara, Kosuke Sato

Abstract: A simple and cost-efficient method for extending a projector's depth-of-field (DOF) is proposed. By leveraging liquid lens technology, we can periodically modulate the focal length of a projector at a frequency that is higher than the critical flicker fusion (CFF) frequency. Fast periodic focal length modulation results in forward and backward sweeping of the focusing distance. Fast focal sweep projection makes the point spread function (PSF) of each projected pixel integrated over a sweep period (IPSF; integrated PSF) nearly invariant to the distance from the projector to the projection surface as long as it is positioned within the sweep range. This modulation is not perceivable by human observers. Once we compensate projection images for the IPSF, the projected results can be focused at any point within the range. Consequently, the proposed method requires only a single offline PSF measurement; thus, it is an open-loop process. We have proved the approximate invariance of the projector's IPSF both numerically and experimentally. Through experiments using a prototype system, we have confirmed that the image quality of the proposed method is superior to that of normal projection with fixed focal length. In addition, we demonstrate that a structured light pattern projection technique using the proposed method can measure the shape of an object with large depth variances more accurately than normal projection techniques.

Robust High-speed Tracking against Illumination Changes for Dynamic Projection Mapping Short Paper

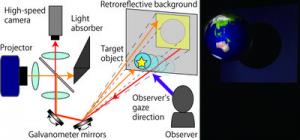

Tomohiro Sueishi, Hiromasa Oku, Masatoshi Ishikawa

Abstract: Dynamic Projection Mapping, which is projection-based AR that follows a moving object without misalignment by means of a high-speed optical axis controller using rotational mirrors, has a trade-off between the stability of high-speed tracking and high visibility for a variety of projection content. In this paper, a system that provides robust, markerless high-speed tracking against illumination changes, including those caused by projected images, is realized by introducing a retroreflective background together with the optical axis controller for Dynamic Projection Mapping. Low-intensity episcopic light is projected with Projection Mapping content, and the light reflected from the background is sufficient for high-speed cameras but is nearly invisible to observers. In addition, we introduce adaptive windows and peripheral weighted erosion to maintain accurate high-speed tracking. Under low light conditions, we examined the visual performance of diffuse reflection and retroreflection from both camera and observer viewpoints. We evaluated stability relative to illumination and disturbance caused by non-target objects. Dynamic Projection Mapping with partially well-lit content in a low-intensity light environment is realized by our proposed system.

A Distributed Memory Hierarchy and Data Management for Interactive Scene Navigation and Modification on Tiled Display Walls Presentation of Previously Published TVCG Paper

Duy-Quoc Lai, Behzad Sajadi, Shan Jiang, Aditi Majumder, M. Gopi

Abstract: Simultaneous modification and navigation of massive 3D models are difficult because repeated data edits affect the data layout and coherency on secondary storage, which in turn affect the interactive out-of-core rendering performance. In this paper, we propose a novel approach for distributed data management for simultaneous interactive navigation and modification of massive 3D data using the readily available infrastructure of a tiled display. Tiled multi-displays, projection or LCD panel based, driven by a PC cluster, can be viewed as a cluster of storage-compute-display (SCD) nodes. Given such a cluster of SCD nodes, we first propose a distributed memory hierarchy for interactive rendering applications. Second, in order to further reduce the latency in such applications, we propose a new data partitioning approach for distributed storage among the SCD nodes that reduces the variance in the data load across the SCD nodes. Our data distribution method takes a data set of any size, reorganizes it into smaller partitions, and stores them across the multiple SCD nodes. These nodes store, manage, and coordinate data with other SCD nodes to simultaneously achieve interactive navigation and modification. Specifically, the data is not duplicated across these distributed secondary storage devices. In addition, coherency in data access, due to screen-space adjacency of adjacent displays in the tile, as well as object space adjacency of the data sets, is well leveraged in the design of the data management technique. Empirical evaluation on two large data sets, with different data density distribution, demonstrates that the proposed data management approach achieves superior performance over alternative state-of-the-art methods.

Friday March 27th

8:30 - 9:45 | Simulation and Rendering

Session Chair: Mathias Harders, University of Innsbruck, Austria

WAVE: Interactive Wave-based Sound Propagation for Virtual Environments Long Paper

Ravish Mehra, Atul Rungta, Abhinav Golas, Ming Lin, Dinesh Manocha

Abstract: We present an interactive wave-based sound propagation system that generates accurate, realistic sound in virtual environments for dynamic (moving) sources and listeners. We propose a novel algorithm to accurately solve the wave equation for dynamic sources and listeners using a combination of precomputation techniques and GPU-based runtime evaluation. Our system can handle large environments typically used in VR applications, compute spatial sound corresponding to the listener's motion (including head tracking) and handle both omnidirectional and directional sources, all at interactive rates. Compared to prior wave-based techniques applied to large scenes with moving sources, we observe significant improvement in runtime memory. The overall sound-propagation and rendering system has been integrated with the Half-Life 2 game engine, Oculus-Rift head-mounted display, and the Xbox game controller to enable users to experience high-quality acoustic effects (e.g., amplification, diffraction low-passing, high-order scattering) and spatial audio, based on their interactions in the VR application. We provide the results of preliminary user evaluations, conducted to study the impact of wave-based acoustic effects and spatial audio on users' navigation performance in virtual environments.

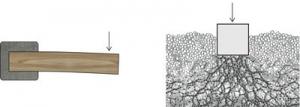

Fast physically accurate rendering of multimodal signatures of distributed fracture in heterogeneous materials Long Paper

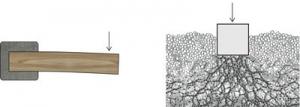

Yon Visell

Abstract: This paper proposes a fast, physically accurate method for synthesizing multimodal, acoustic and haptic, signatures of distributed fracture in quasi-brittle heterogeneous materials, such as wood, granular media, or other fiber composites. Fracture processes in these materials are challenging to simulate with existing methods, due to the prevalence of large numbers of disordered, quasi-random spatial degrees of freedom, representing the complex physical state of a sample over the geometric volume of interest. Here, I develop an algorithm for simulating such processes, building on a class of statistical lattice models of fracture that have been widely investigated in the physics literature. This algorithm is enabled through a recently published mathematical construction based on the inverse transform method of random number sampling. It yields a purely time domain stochastic jump process representing stress fluctuations in the medium. The latter can be readily extended by a mean field approximation that captures the averaged constitutive (stress-strain) behavior of the material. Numerical simulations and interactive examples demonstrate the ability of these algorithms to generate physically plausible acoustic and haptic signatures of fracture in complex, natural materials interactively at audio sampling rates.

Aggregate Constraints for Virtual Manipulation with Soft Fingers Long Paper

Anthony Talvas, Maud Marchal, Christian Duriez, Miguel A. Otaduy

Abstract: Interactive dexterous manipulation of virtual objects remains a complex challenge that requires both appropriate hand models and accurate physically-based simulation of interactions. In this paper, we propose an approach based on novel aggregate constraints for simulating dexterous grasping using soft fingers. Our approach aims at improving the computation of contact mechanics when many contact points are involved, by aggregating the multiple contact constraints into a minimal set of constraints. We also introduce a method for non-uniform pressure distribution over the contact surface, to adapt the response when touching sharp edges. We use the Coulomb-Contensou friction model to efficiently simulate tangential and torsional friction. We show through different use cases that our aggregate constraint formulation is well-suited for interactively simulating dexterous manipulation of virtual objects through soft fingers, and efficiently reduces the computation time of constraint solving.

13:30 - 14:15 | Mobile Devices

Session Chair: Ferran Argelaguet, Inria, France

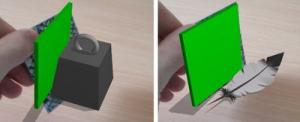

Mixed Reality Simulation with Physical Mobile Display Devices Short Paper

Mathieu Rodrigue, Drew Waranis, Tim Wood, Tobias Höllerer

Abstract: This paper presents the design and implementation of a system for simulating mixed reality in setups combining mobile devices and large backdrop displays. With a mixed reality simulator, one can perform usability studies and evaluate mixed reality systems while minimizing confounding variables. This paper describes how mobile device AR design factors can be flexibly and systematically explored without sacrificing the touch and direct unobstructed manipulation of a physical personal MR display. First, we describe general principles to consider when implementing a mixed reality simulator, enumerating design factors. Then, we present our implementation which utilizes personal mobile display devices in conjunction with a large surround-view display environment. Standing in the center of the display, a user may direct a mobile device, such as a tablet or head-mounted display, to a portion of the scene, which affords them a potentially annotated view of the area of interest. The user may employ gesture or touch screen interaction on a simulated augmented camera feed, as they typically would in video-see-through mixed reality applications. We present calibration and system performance results and illustrate our system's flexibility by presenting the design of three usability evaluation scenarios.

Object Impersonation: Towards Effective Interaction in Tablet- and HMD-Based Hybrid Virtual Environments Short Paper

Jia Wang, Robert Lindeman

Abstract: In virtual reality, hybrid virtual environment (HVE) systems provide the immersed user with multiple interactive representations of the virtual world, and can be effectively used for 3D interaction tasks with highly diverse requirements. We present a new HVE metaphor called Object Impersonation that allows the user to not only manipulate a virtual object from outside, but also become the object, and maneuver from inside. This approach blurs the line between travel and object manipulation, leading to efficient cross-task interaction in various task scenarios. Using a tablet- and HMD-based HVE system, two different designs of Object Impersonation were implemented, and compared to a traditional, non-hybrid 3D interface for three different object manipulation tasks. Results indicate improved task performance and enhanced user experience with the added orientation control from the object's point of view. However, they also revealed higher cognitive overhead to attend to both interaction contexts, especially without sufficient reference cues in the virtual environment.

Mobile User Interfaces for Efficient Verification of Holograms Short Paper

Andreas Hartl, Jens Grubert, Christian Reinbacher, Clemens Arth, Dieter Schmalstieg

Abstract: Paper documents such as passports, visas and banknotes are frequently checked by inspection of security elements. In particular, view-dependent elements such as holograms are interesting, but the expertise of individuals performing the task varies greatly. Augmented Reality systems can provide all relevant information on standard mobile devices. However, hologram verification still takes a long time and places a considerable load on the user. We aim to address this drawback by first presenting a workflow for recording and automatic matching of hologram patches. Several user interfaces for hologram verification are presented, aiming to noticeably reduce verification time. We evaluate the most promising interfaces in a user study with prototype applications running on off-the-shelf hardware. Our results indicate that there is a significant difference in capture time between interfaces but that users do not prefer the fastest interface.

14:15 - 14:45 | AR Lighting

Session Chair: Daisuke Iwai, Osaka University, Japan

Image-Space Illumination for Augmented Reality in Dynamic Environments Short Paper

Lukas Gruber, Jonathan Ventura, Dieter Schmalstieg

Abstract: We present an efficient approach for probeless light estimation and coherent rendering of Augmented Reality in dynamic scenes. This approach can handle dynamically changing scene geometry and dynamically changing light sources in real time with a single mobile RGB-D sensor and without relying on an invasive lightprobe. We jointly filter both in-view dynamic geometry and outside-view static geometry. The resulting reconstruction provides the input for efficient global illumination computation in image-space. We demonstrate that our approach can deliver state-of-the-art Augmented Reality rendering effects for scenes that are more scalable and more dynamic than those handled in previous work.

Light Field Projection for Lighting Reproduction Short Paper

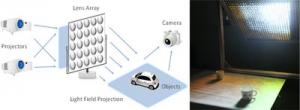

Zhong Zhou, Tao Yu, Xiaofeng Qiu, Ruigang Yang, Qinping Zhao

Abstract: We propose a novel approach to generate a 4D light field in the physical world for lighting reproduction. The light field is generated by projecting lighting images on a lens array. The lens array turns the projected images into a controlled anisotropic point light source array which can simulate the light field of a real scene. In terms of acquisition, we capture an array of light probe images from a real scene, based on which an incident light field is generated. The lens array and the projectors are geometrically and photometrically calibrated, and an efficient resampling algorithm is developed to turn the incident light field into the images projected onto the lens array. The reproduced illumination, which allows per-ray lighting control, can produce realistic lighting results on real objects, avoiding the complex process of geometric and material modeling. We demonstrate the effectiveness of our approach with a prototype setup.